
BrainBlast 2010 Survey Results

In the first week of August 2010 we wrapped up our 4th annual BrainBlast conference. It was quite a success. After 4 years, things tend to run a lot smoother at BrainBlast than they used to. According to most of the participants, the training was great, the keynote speakers were outstanding, and the food from Sandy’s was excellent. We did a few things differently this year. One big change is that we’re making a considerable push toward Moodle in our district, having already made it available to our teachers as an online classroom management system for the past 3 years. For BrainBlast 2010, we made sure almost every secondary teacher was enrolled in a Moodle session by providing enough sessions teaching this great tool. We’re also upgrading to Windows 7 in our district this year, and provided Windows 7 training to teachers in the first phase of the transition.

General Statistics

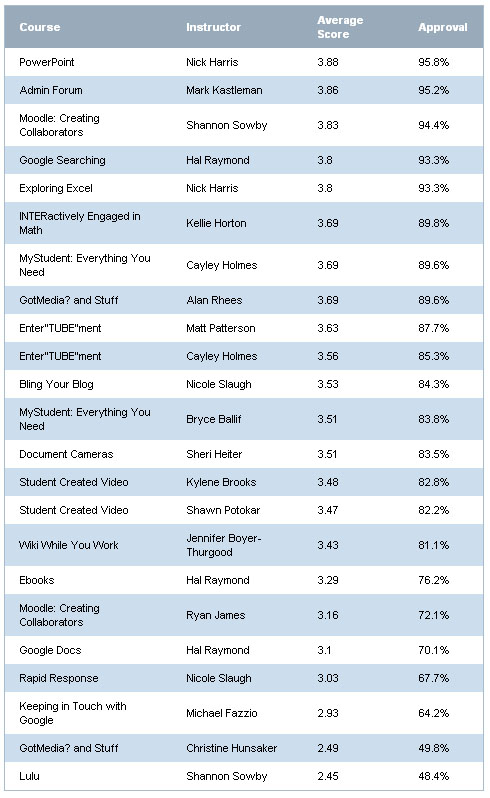

218 people participated in the survey, or 63% of the attendees: 210 teachers (70% of the teachers) and 8 administrators (18% of the administrators). Table 1 indicates the highest-ranking courses, with the average score for each on a scale of 1 to 4.

Table 1: Course Rankings and Approval

Mark Kastleman was a huge hit, both in his first-day keynote session and in the two follow-up sessions he held with the administrators. Outranked only narrowly by Nick Harris’ PowerPoint session, he delivered a dynamic keynote presentation about the relationship between the brain and learning. We were fortunate and grateful that he and Mike King were able to share their insights at BrainBlast, and that Mark was able to provide follow-up sessions for the administrators.

This year we made sure to collect data on what people didn’t like about the conference. Gathering negative feedback was a deliberate focus of the survey, and it made up most of the comments we received. We want to know what we’re not doing right, and constructive criticism will be one of the most helpful things to guide future BrainBlasts. The following information is not intended to be a “slam” against BrainBlast or anyone involved in organizing this excellent training event. Instead, I hope the comments prove helpful in optimizing and enhancing the learning experience for all BrainBlast participants. Some of the most common themes that came up in the survey results are highlighted below.

Irrelevancy of the Classes

“I would have liked to actually been in the classes that I registered for. There were only two classes on my schedule that I wanted to be in. The others I did not register for, which was kind of annoying.”

“The hardest thing is when you are signed up for classes that you are not interested in at all. I have no desire to do Moodle and I was enrolled in the class for a second year in a row. Last year was fine, but this year I had no desire to listen. Is there a way we could let you know what classes we don’t want to take along with the ones we do?”

“Design [the] number of classes to accommodate number of students asking for them so you get the choices you want.”

“I felt that I was already pretty versed in [MyStudent] and didn’t learn anything new. I was not happy with having to take it over courses like Blogs!”

Many participants commented that they already knew the material in the classes they were assigned to, or that they didn’t get any of the classes they wanted and the material wasn’t relevant to them at all. This was an especially common concern in the Lulu class, as well as a few others. “It was not made clear how we would use the websites offered in [the Lulu] course,” one participant noted. “The online books seem too expensive to use in the classroom.” Another stated that Lulu “did not really apply to my curriculum.” “I didn’t really see the practical application of how I could use [Lulu] in class,” agreed yet another teacher. A recurring concern was that Lulu books tend to be too expensive for most parents.

A couple of factors directly contributed to participants being placed in classes that weren’t relevant to them:

- We did not collect much information at registration about teachers’ existing skill sets, let alone use this information to place teachers in classes that would be suitable for them.

- We did not use the wishlisted courses to determine how many sessions to offer. Last year, the average participant got 68% of the courses they wishlisted. This year, the participants only got 16%. The reason is this: When participants initially registered, they specified up to 6 courses they would like to take. They did this last year, too, except this year we did not delineate these courses by their track (elementary, secondary, or administrator). As a result, a lot of participants were wishlisting courses in tracks that would not be available to them. Elementary teachers were wishlisting courses in the secondary track, secondary teachers were wishlisting courses in the elementary track, and all teachers were wishlisting courses in the administrator track.
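One way to make the 68% and 16% figures concrete is to compute, for each participant, the share of their wishlisted courses that actually ended up on their schedule, and then average across participants. The write-up does not say exactly how the figure was calculated, so this Python sketch assumes that definition; the participant IDs and schedules are hypothetical, with course titles borrowed from this year’s program.

```python
def average_fulfillment(wishlists, schedules):
    """Average, across participants, of the share of wishlisted courses they were scheduled into."""
    rates = []
    for person, wished in wishlists.items():
        wished = set(wished)
        if not wished:
            continue  # nothing to measure if the participant wishlisted no courses
        received = set(schedules.get(person, []))
        rates.append(len(wished & received) / len(wished))
    return sum(rates) / len(rates) if rates else 0.0


# Hypothetical participants (not survey data).
wishlists = {
    "teacher_a": ["Bling Your Blog!", "Moodle", "Lulu"],
    "teacher_b": ["Bling Your Blog!", "Student Created Video"],
}
schedules = {
    "teacher_a": ["Moodle", "Lulu", "Keeping in Touch with Google"],
    "teacher_b": ["Lulu", "Keeping in Touch with Google"],
}
# teacher_a got 2 of 3 wishlisted courses, teacher_b got 0 of 2.
print(f"{average_fulfillment(wishlists, schedules):.0%}")  # prints 33%
```

Averaging per participant (rather than pooling all wishlist entries) keeps one person with a long wishlist from dominating the result.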

The bottom line is that we might as well not have bothered with a wishlist, since it hardly determined which classes were assigned to whom.

Table 2 indicates the preferred courses per track, as well as whether or not the course was even available in the track. The second column in each table shows the number of times the course was wishlisted during the initial registration, and the third column shows how many slots we actually provided for the course for the entire conference.

Table 2: Preferred Courses vs. Available Seats

Bling Your Blog! was one of the most requested classes (246 participants requested it), yet we made room for only 2 sessions (40 participants, or 16% of the requesters), and only in the elementary track. “I would have liked to attend a course on blogs,” remarked one teacher. “That was the one I wanted the most, but didn’t get to go to it.” “Please have a course on creating blogs for beginners,” begged another, “and have enough sessions [so] all who want it can have it.” Conversely, Lulu was one of the least requested classes. Only 76 participants requested it, yet we provided room for 5 sessions (100 participants). And we provided 6 sessions (120 participants) of Keeping in Touch with Google for elementary teachers, yet only 21 people in the elementary track actually requested it! It’s hardly a surprise that these two ended up among the lowest-ranking courses in the survey results, with so many uninterested people forced into them.
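The mismatch is easier to see as a single ratio per course: seats offered divided by requests received. Below 100% means unmet demand; above 100% means seats that had to be filled with people who didn’t ask for the class. This Python sketch uses only the three courses and the figures quoted in the paragraph above; the `coverage` helper is just an illustration.

```python
# (requests, seats offered), as quoted in the survey write-up.
courses = {
    "Bling Your Blog!": (246, 40),
    "Lulu": (76, 100),
    "Keeping in Touch with Google (elementary)": (21, 120),
}


def coverage(requests, seats):
    """Seats offered per request: below 1.0 is unmet demand, above 1.0 is surplus seats."""
    return seats / requests


for name, (requests, seats) in courses.items():
    print(f"{name}: {coverage(requests, seats):.0%} of demand")
```

This prints roughly 16%, 132%, and 571%: the blogging class seated about one requester in six, while the elementary Google class had more than five seats for every person who asked for it.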

Recommendations for Improvement

Even if it means we need to open up BrainBlast registration earlier to allow for more planning time, we should consider the wishlisted classes and provide a suitable number of sessions to accommodate them. However, there is still value to a strictly randomized scheduling process. Teachers may attend classes they would not have normally requested due to an unfamiliarity with the material, but once they participate in the class they acquire new knowledge and skills directly relevant to their subject, classroom, and instructional strategies. At least, that’s one factor that has driven the design and development of BrainBlast. Every class should be relevant to everyone. Clearly, we did not accomplish that this year.
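A rough starting point for “a suitable number of sessions” is simple arithmetic. The figures in this post imply 20 seats per session (2 sessions for 40 participants, 5 for 100, 6 for 120). Assuming that capacity, and assuming every requester should get a seat, a course needs its request count divided by 20, rounded up. This Python sketch ignores real constraints such as rooms, instructors, time slots, and the fact that each participant wishlists up to 6 courses, so it is an estimate rather than a scheduling method.

```python
import math

# Seats per session inferred from this post's figures (2 sessions = 40, 5 = 100, 6 = 120).
SEATS_PER_SESSION = 20


def sessions_needed(requests, seats_per_session=SEATS_PER_SESSION):
    """Fewest sessions that would seat every participant who wishlisted the course."""
    return math.ceil(requests / seats_per_session)


# (requests, sessions actually offered) for the courses discussed above.
offered = {
    "Bling Your Blog!": (246, 2),
    "Lulu": (76, 5),
    "Keeping in Touch with Google (elementary)": (21, 6),
}
for name, (requests, sessions) in offered.items():
    print(f"{name}: offered {sessions}, demand suggests {sessions_needed(requests)}")
```

By this estimate the blogging class would have needed 13 sessions instead of 2, while Lulu needed 4 instead of 5 and the elementary Google class 2 instead of 6.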

We should not discount the role a teacher’s lack of interest plays. If they simply don’t care about the subject when they walk into the class, that attitude will carry all the way through and stifle any engagement and learning they might otherwise experience. We need to find a way to identify which courses may be most relevant to a teacher, even if they don’t know it themselves. At the very least, we should find a middle ground between encouraging teachers to explore new technologies and supplying enough sessions of the classes they specifically choose and feel are most relevant to them. As one teacher helpfully recommended, “Make sure that the courses are relevant to the participant . . . the majority of the courses were not very applicable to me. Plus, I only received 1 out of 6 courses that I had selected. The ones I had chosen would have been extremely relevant!”

Separation of Beginner and Advanced Classes

“It is frustrating to be in a course and want to learn more than you already know and it is based around the beginner.”

“Within the first part of the class I had things figured out, but we couldn’t move into more depth because there were other people in there that struggled.”

“Please, please, please have a beginners track and an advanced track. It is SO frustrating to not be able to learn anything new because the instructor runs out of time due to the fact there are people in the class that can’t even open the program they are talking about. ‘Wait! Slow down! How did you open Windows Movie Maker?!’ Very frustrating!”

“Some of the presenters tried to teach too much and I was way behind and couldn’t keep up.”

“Make a track that separates those who have little / no experience and those who have more experience and want to go deeper.”

The suggestion to divide classes by skill sets was very common this year. “Organize the classes by beginner, intermediate, advanced as well as by subject,” a teacher recommended. “It gets very frustrating to be in a class where the teacher is trying to explain a more complex subject and some of the students can’t even remember how to login or what the Internet icon looks like.” “I really feel that classes need to start being identified as beginner classes and classes for more advanced learners,” commented another. “A couple of the classes attended were not as helpful because they are skills I have learned at previous BrainBlasts.”

This is not a bad suggestion, and there’s no denying that teachers attending BrainBlast have a wide range of abilities. Some come to the conference as technology experts, others as beginners, and this mix creates problems and frustration for different people in the classroom. “Have the instructors not wait for the one or two that don’t get it or are behind to catch up with the class,” recommended one teacher. “It really wastes time for those (the bulk of the class) that are ready to move to the next step.” The in-room techs should be able to help struggling teachers catch up so the instructor doesn’t have to stop and wait, and perhaps we need to make that role clearer to them. However, it is difficult to design a class that works for everyone when a few people are holding things back. It also creates a poor learning experience if some participants are constantly behind and relying on the school techs just to keep up with the instructor.

Recommendations for Improvement

There is a need to organize classes into beginner and advanced, even if these are subdivisions within the elementary and secondary tracks. Our teachers have widely varied skill sets, and if we’re going to be randomly assigning them to classes, we shouldn’t operate under the assumption that “one class fits all.” Evaluating different technical skills is tricky, but well-crafted skill assessments should occur at registration time. At the very least, the random assignments should occur within subsets of designated beginner and advanced classes.
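A minimal Python sketch of that last idea: each teacher is tagged as beginner or advanced (for example, from a registration-time skill assessment), and the random draw only picks from classes designated for that level. The helper, class names, and teacher IDs are all hypothetical, and seat limits per session are ignored for brevity.

```python
import random


def assign_within_level(teachers, classes_by_level, per_teacher, seed=None):
    """Randomly pick distinct classes for each teacher, drawing only from their level's pool."""
    rng = random.Random(seed)  # seeded generator so a schedule can be reproduced
    schedule = {}
    for name, level in teachers.items():
        pool = classes_by_level[level]
        schedule[name] = rng.sample(pool, min(per_teacher, len(pool)))
    return schedule


# Hypothetical class pools and teachers.
classes_by_level = {
    "beginner": ["Intro to Moodle", "Blogging Basics", "Movie Maker Basics"],
    "advanced": ["Moodle Quizzes", "Bling Your Blog!", "Student Created Video"],
}
teachers = {"T1": "beginner", "T2": "advanced", "T3": "beginner"}

schedule = assign_within_level(teachers, classes_by_level, per_teacher=2, seed=2010)
for name, classes in schedule.items():
    print(name, teachers[name], classes)
```

Randomness still exposes teachers to tools they might not have picked, but a beginner is never dropped into an advanced session, and vice versa.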

No More Shirts!

“Please get rid of the shirts and use that money for prizes.”

“If you must keep the shirt, make it a t-shirt. They’re cheaper.”

“Use the money spent on shirts to go towards equipment to be used in the classroom.”

“Give us more useful tech stuff. I would rather have had a tripod than a shirt.”

“No more shirts, they are atrocious and they are a waste of money.”

General disdain for the shirts was another common theme. I believe there is value in making BrainBlast shirts available to all who want them, but perhaps next year participants could opt out of receiving a shirt when they register.

Other Ideas: Workshops

“I would like to have the option of being able to take the full two days to have a working workshop in Moodle,” suggested one teacher, “so that I can come away with tangible material that I can use in the classroom.” Day-long workshops are not uncommon to technology conferences, and some will provide this option, typically on an optional last day. Other times this is done during the regular conference schedule, so the participant enrolls in day-long workshops in lieu of a diversity of classes. Generally, the idea for BrainBlast has been to provide a good range of classes to expose teachers to multiple technologies, and to set aside workshop-oriented instruction for E-volve and iLead classes. However, many participants mentioned that the class time was too short to reasonably cover the material:

“I would like to have the option of being able to take the full two days to have a working workshop in Moodle,” suggested one teacher, “so that I can come away with tangible material that I can use in the classroom.” Day-long workshops are not uncommon to technology conferences, and some will provide this option, typically on an optional last day. Other times this is done during the regular conference schedule, so the participant enrolls in day-long workshops in lieu of a diversity of classes. Generally, the idea for BrainBlast has been to provide a good range of classes to expose teachers to multiple technologies, and to set aside workshop-oriented instruction for E-volve and iLead classes. However, many participants mentioned that the class time was too short to reasonably cover the material:

“Most of the classes went by too fast, especially on wikis. I had never even heard of one and there was just not enough time to really understand.”

“Make more ‘beginning level’ classes available and expand time on some of the sessions so we have more time to ‘create’ or to produce a finished product.”

“Some classes need to spend more time on basics. Go deep instead of broad.”

“Got Media & Stuff was the weakest because it contained so much info and not enough time to explain about each site.”

“Student Created Video would have been better if there was enough time. The time was cut short due to the general session going over a bit.”

“Could have used more time in Bling My Blog….and Google…”

Whether longer, focused workshops would fit well into BrainBlast remains to be determined. Given our overall training efforts, it would probably be more suitable to move workshop-based courses into other training venues, such as E-volve or iLead.

Other Ideas: Virtual BrainBlast

“It would be nice to have this more than once a year,” suggested another teacher. I’m reminded of FETC, one of the largest annual educational technology conferences in the U.S. Most educators are probably familiar with it, but many do not know that it also hosts Virtual FETC conferences a couple of times throughout the year. The online version of FETC is presented in a web-based virtual environment using INXPO’s Virtual Events & Conferences. Participants can move around the virtual conference setting, view products and communicate with vendors in real time, collect virtual memorabilia, take notes, and interact with other educators online. Sessions are broadcast at set times, typically chosen from archived recordings of the last FETC, though live streams are possible, too. During the sessions, participants can, and are even encouraged to, chat with each other about the topic being presented. Some have claimed that the best learning at a conference happens before and after the sessions, when everyone networks to talk about what was learned, explore how to apply the new information, and share their own insights with a group of eager-to-listen professionals in their field. Collaboration is a hugely beneficial aspect of any conference, and virtual conferences are well suited to it. More participants can gather in the same virtual room than would fit in a physical one, and they can collaborate as a group in ways that are harder to manage at a large in-person gathering of educators.

Virtual conferences are not unusual. Second Life is another popular platform for delivering virtual conferences such as Virtual Worlds Best Practices in Education (VWBPE), SLanguages, UNC: Teaching and Learning with Technology, SLACTIONS, and others. While I wouldn’t advocate opening up Second Life to all teachers in the district (not at this point, at least), it could be a viable platform for a virtual BrainBlast. On the other hand, a virtual BrainBlast wouldn’t need to be so sophisticated. Just gathering teachers in a basic web-based environment where they can communicate and participate in great training has tremendous benefits. And it would be a considerably more cost-effective supplement to BrainBlast than the summer event at Weber High School.

Evaluating Learning

One obvious question we may be overlooking is, “How well do we assess the learning of the participants?” BrainBlast is designed to be a highly constructionist, hands-on learning experience. Every classroom is equipped with a computer lab, and during the sessions the participants are expected to produce concrete artifacts and gain both conceptual and procedural knowledge of specific technology tools. Yet no formal learning evaluation process is in place that I’m aware of. At the very least, the instructors and school techs stationed in each room should take notes during or after each session on the overall learning experience and any common problems that arose, and answer questions about how effective the session was. Such direct observation would prove invaluable in determining which instructional strategies do and do not work.

There are three particular aspects we should measure in any evaluation: efficiency, effectiveness, and impact. BrainBlast is undeniably efficient: all participants are given a wide range of training within a 12-hour timeframe over 2 days. The effectiveness is a little more debatable. Do all participants leave the sessions knowing the material they were supposed to learn? Are there always clear objectives in each class that should be accomplished by each participant? While we don’t want participants to take a grueling multiple choice quiz at the end of each session to determine if they actually learned anything, we can assess their learning by viewing their completed artifacts.

However, does BrainBlast actually have an impact? After a teacher takes a blogging class at BrainBlast, do they become an active blogger throughout the school year? If a teacher receives training on using Flip Video cameras, do they start using this in their class, and finding ways to engage students with video creation? 140 secondary teachers received training in Moodle. And now, a few months later, we still only have about 30-40 teachers actively using our Moodle system. This is about the same number of teachers that were using Moodle last year. Did BrainBlast actually have an impact on Moodle usage?

Granted, we shouldn’t expect teachers to use every educational technology tool they learned about in their daily instruction, but currently we do not formally measure whether teachers are using what they learned one, three, or six months after the event. From past experience, having fielded the most basic questions on how to blog, upload WeberTube videos, or use a document camera from teachers we know attended the related BrainBlast training, I believe the effectiveness and impact of BrainBlast still need to be determined.

Where is Social Bookmarking?

I commented on this topic 2 years ago after BrainBlast 2008. Classes on social bookmarking have been strangely absent from BrainBlast’s course offerings. Since 2007, Delicious, the most popular social bookmarking tool, has consistently been ranked #1 or #2 among the most useful tools for learning by the Centre for Learning & Performance Technologies, which gathers data and feedback from educators all over the world. Why is social bookmarking such an important tool for teachers? There are numerous educational resources on the web, and social bookmarking is the most popular and effective way for teachers to share them with each other. It’s not just ordinary things like math manipulatives or quiz sheets that are shared, but links to videos, podcasts, desktop applications, helpful teacher blogs, educational trends, and bleeding-edge teaching ideas. Social bookmarks open up the worldwide teacher network, and our teachers can tap into this learning network by taking their bookmarks online.

Saving social bookmarks is as easy as installing the Delicious add-on in your browser and clicking Save. It’s no more complicated than clicking “Add to Favorites” in Internet Explorer. Plus, you can access your bookmarks from any computer, so you don’t have to panic when you realize that cool link to the video you wanted to show your class was bookmarked on your home computer, but not your work computer. Social bookmarking is an invaluable asset. You no longer have to hunt down every resource for your classroom lessons yourself; you can tap into the research that thousands of other teachers have done and find existing links that others have shared. New links can be emailed to you daily, or you can subscribe to RSS feeds of bookmarks shared by different users, or of bookmark groups created around topics. 99% of Weber School District’s Links of the Day come from Delicious and Diigo (another popular social bookmarking service, with some great extra features like highlighting and annotating pages). And about 99.9% of the links that get sent to my inbox never make it onto the Links of the Day page, so it would be beneficial for teachers to have access to these other links themselves.

The bottom line is this: Our teachers are going to a technology conference, to learn about tools for learning and teaching. They are sitting in computer labs and learning about great web sites. But what do they do when they want to save these links? They whip out their notebooks and pens and write them down. These could easily be saved with a simple click and accessed later from any computer. The final step in the process is missing. All the BrainBlast links could even be merged into a shared bookmark group that anyone, anywhere can access for future reference. It’s rather strange that one of the top tools for learning has never even been mentioned at BrainBlast.

Conclusion

Overall, participants were quite happy with how the conference played out. There is clearly some room for improvement, particularly in ensuring the classes are relevant to everyone, and taking unique skillsets and aptitudes into account when the participants’ classes are assigned. We must improve our evaluation procedures as well, and pay careful attention to collecting good, reliable data if we are to determine how best to improve this event. There may be some value to including an online venue to supplement BrainBlast, and making sure that all the materials are easily accessible at a later date.

And finally, the best suggestion we received from the survey:

Evaluating BrainBlast

I’m getting close to wrapping up my reading of a rather interesting and insightful book: The ABCs of Evaluation (Boulmetis & Dutwin, 2005). It’s been an eye-opener for me, and has caused me to rethink how a lot of our professional development programs are evaluated.

I’ve been writing a report about the survey results we collected last month at BrainBlast, our district’s annual technology conference. It’s a fantastic event that we put on every year in the summer. Up to 300 teachers and administrators attend the conference, participate in hands-on workshops, and win cool prizes. And every year we try to get good feedback about how the year’s conference went, by encouraging everyone to take a survey.

I started my report of BrainBlast 2010’s survey back in August, without realizing that what I was writing was an evaluation report. However, I’ve since realized I made quite a few mistakes in my methodology, and I’ll probably need to start again from square one. For one, my evaluation was based largely on data that was improperly quantified. We collected ordinal data by prompting each participant to rate their courses as Poor, Fair, Good, or Outstanding, but I then converted these ratings to numbers (1 for Poor, 2 for Fair, 3 for Good, 4 for Outstanding), even though the intervals between the levels are not necessarily equal. A lot of my report was based on this faulty assumption, and I made the same mistake a couple of years ago in my comments about the BrainBlast 2008 survey. As a result, I’ll need to reassess the data we collected this year.
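Because the labels are ordered but not evenly spaced, a safer summary reports how many responses fell into each category, plus the median category, instead of averaging 1 to 4 codes. Here is a minimal Python sketch of that approach, assuming responses arrive as the four label strings; the ratings in the example are made up for illustration, not taken from the survey.

```python
from collections import Counter

# Ordered response categories used on the survey, lowest to highest.
LEVELS = ["Poor", "Fair", "Good", "Outstanding"]


def summarize_ordinal(responses):
    """Return counts per category and the (lower) median category, with no arithmetic on labels."""
    unknown = set(responses) - set(LEVELS)
    if unknown:
        raise ValueError(f"unexpected responses: {sorted(unknown)}")
    if not responses:
        raise ValueError("no responses to summarize")
    counts = Counter(responses)
    distribution = {level: counts[level] for level in LEVELS}
    # Walk up the ordered categories until at least half of the responses are covered.
    cumulative = 0
    for level in LEVELS:
        cumulative += distribution[level]
        if 2 * cumulative >= len(responses):
            return distribution, level


# Hypothetical ratings for one course.
responses = ["Good", "Outstanding", "Good", "Fair", "Outstanding"]
distribution, median = summarize_ordinal(responses)
print(distribution)  # {'Poor': 0, 'Fair': 1, 'Good': 2, 'Outstanding': 2}
print(median)        # Good
```

Reporting the full distribution alongside the median keeps the information a mean would hide, such as whether a course split its audience between Poor and Outstanding.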

In general, the survey we administered wasn’t comprehensive or designed with a full-scale evaluation in mind, but I’ll do the best I can. Boulmetis & Dutwin (2005) outline a good format for writing evaluation reports, consisting of sections for a summary, evaluation purpose, program description, background, evaluation design, results, interpretation and discussion of the results, and recommendations. I think this is a good model to follow for any evaluation. At the very least it will be good practice for me as I hone my evaluation skills, and next year I’ll make sure I play a larger role in how we evaluate BrainBlast. Putting on BrainBlast is a significant financial investment for the district, albeit a very worthwhile one. It’s important that we make the most of this valuable form of professional development.

References

Boulmetis, J., & Dutwin, P. (2005). The ABCs of evaluation. San Francisco, CA: John Wiley & Sons.

BrainBlast 2008: Survey Results and Musings

Last month, in the first week of August, the Weber School District Technical Services Department hosted the second annual BrainBlast conference. BrainBlast is a time of year when we techs get to feel like rock stars, and the whole thing was a resounding success. The keynote speakers were excellent, the techs were responsive, Vinnie’s antics were hilarious, the vast majority of the teachers I spoke to felt they were learning a lot and that the conference was worthwhile, and we amazingly managed to pull off an impromptu musical presentation on the last day for the secondary teachers.

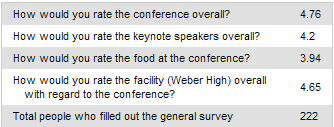

The feedback we received from the teachers we surveyed was generally quite positive. We asked everyone to answer some general questions about the conference, followed by some specific questions about each class. I’ve processed the results and calculated some average statistics. All the ratings below are on a scale of 1 to 5, with 5 being the highest.

Conference Ratings

The average rating for the conference overall, across 222 survey-takers, was a high 4.76, which is especially pleasing. We had some great keynote speakers: Kevin Eubank, Jim Vanides, and Ken Sardoni. In retrospect, we should have had each keynote speaker rated separately in the survey, rather than all together. We’ll make sure we do that next year. The food from Iron Gate Grill wasn’t bad, but nothing to write home about, so I basically agree with the rating there. And the Maintenance Department did a great job getting Weber High ready. Kudos to everyone who helped put everything together.

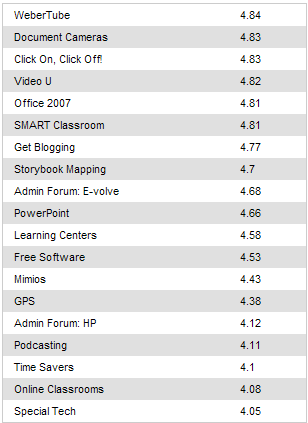

We offered 19 different classes in all. The following statistics show the most well-received classes:

It’s not surprising to see the class on WeberTube, our new media sharing site, at #1. Shawn Potokar debuted this excellent new system at BrainBlast, and it received a great response. This is what teachers have been waiting for. I’ve mentioned this before, but I believe that if we block something that’s useful for educational purposes, we’re obligated to provide an alternative. Due to inappropriate content we must block YouTube, but WeberTube is our answer to that. And in his session, Shawn even showed the attendees how to pull videos off YouTube at home and upload them to WeberTube (see this post by Justin McFarland for a summary of this method). I’ve enjoyed seeing the videos our teachers have uploaded so far this school year.

Shawn Potokar also taught the Video U class, the #4 rated class, and showed some basic tips with Windows Movie Maker. Honestly, I wasn’t all that familiar with Movie Maker, but now I regret it. Last July I was assigned the task of creating a 30-minute video compilation for my grandmother’s 90th birthday, and I wrestled with various tools, mostly Adobe Premiere, trying to slap a working product together. Movie Maker would have been so much easier, and I wish Shawn could have shown me the ease and benefits of this product sooner.

Jennifer Boyer-Thurgood presented the Document Cameras class, which came in at #2. She was also one of the key organizers of the conference, so if you thought BrainBlast was awesome, you can thank her for it. Document cameras are extremely cool and extremely useful, and it was great to see first-hand how a teacher might use them in the classroom. I wish we’d had them when I was in public school. I’m excited to see more and more teachers in our district get them, and I hope they use them in creative ways.

Bryce Ballif’s clickers class came in at #3. I wasn’t very familiar with clickers, and this was the best introduction to them I could have hoped for. What a great tool these are for classrooms! Instant responses, statistical feedback… I only wish he’d had more time to cover advanced uses, or that more of the attendees could have shared how the clickers had affected their teaching.

Moodle Problems

Ryan James introduced WSD Online, our own Moodle system. We launched it at BrainBlast along with WeberTube, but unfortunately the class came in second to last. Some teachers told me they were very impressed with WSD Online, but that there was still a lot left for them to learn. That in itself was the problem, and the reason for the low ranking, since it shows how steep Moodle’s learning curve actually is. The steeper the learning curve, the less likely our teachers are to use the product.

A few technical problems with our Moodle system reared their ugly heads during the conference, too. First of all, I didn’t anticipate administrators being in the class, though I should have seen it coming! All our Moodle class data is pulled from our AS400, and since only teachers have classes in the system, administrators were left with no classes to see and nothing to do. As a result, I ended up creating a BrainBlast sandbox after the first “Online Classes” session, so administrators could log in, and at least have a class to create content in.
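One way to picture the gap: if the sync simply mirrors whatever classes the AS400 lists for each user, anyone with an empty list ends up with an empty Moodle account. The sketch below is a hypothetical Python illustration, not our actual sync script, that falls back to a shared sandbox course in that case. The export rows, the usernames, and the `BRAINBLAST-SANDBOX` shortname are assumptions made up for the example.

```python
# Hypothetical AS400 export rows: (username, list of course shortnames).
# The real export format isn't described in the post; this is an assumption.
SANDBOX_COURSE = "BRAINBLAST-SANDBOX"  # hypothetical sandbox course shortname

def build_enrollments(roster, sandbox=SANDBOX_COURSE):
    """Map each user to the Moodle courses they should be enrolled in.

    Users whose export lists classes (teachers) get those classes.
    Users with no classes (e.g. administrators) get the shared sandbox
    course instead of an empty account.
    """
    enrollments = {}
    for username, courses in roster:
        enrollments[username] = list(courses) if courses else [sandbox]
    return enrollments

roster = [
    ("jteacher", ["ENG101-1", "ENG101-2"]),  # a teacher with two sections
    ("aprincipal", []),                      # an administrator with no classes
]
print(build_enrollments(roster))
```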

I’m considering a solution for administrators: possibly a one-click install that would let each of them set up their own Moodle system and do whatever they want with it. In effect, this would give administrators even more control over their courses than teachers currently have, and they could create training courses for people both inside and outside the district, if necessary. (I’ll have to consult with upper management about this one and hash out a few more ideas.) In the meantime, administrators looking for a way to offer online classes might want to check out WiZiQ or HotChalk.

Second, somehow Two Rivers High School’s classes weren’t added to WSD Online when I upgraded to version 1.9 several weeks ago. A few teachers tracked me down to voice their concerns about this. (My apologies to the Two Rivers teachers.)

Third, Moodle apparently spammed a bunch of teachers (sorry!). When we set up the BrainBlast 2008 forum in Moodle, we didn’t turn off the “email every comment everyone posts to everyone who has touched Moodle at any point in time” setting. Why that’s turned on by default, I have no idea!

Live Streams

This is the first time we’ve streamed some of the BrainBlast sessions live over the Internet. We even set up a chat room that viewers could visit while the sessions were going on. Since it was mostly experimental, we didn’t advertise it as well as we could have, but I feel it was very useful. We had a few people come and go, plus some recurring visitors, namely Brent Ludlow from Hooper Elementary and Mrs. Durff from my Twitter network. I was just streaming from my dinky little webcam, but maybe next year we can install ManyCam or something similar on the presenters’ desktops and stream the presentations at better quality that way. Equipping the presenters with wireless mics may be a good investment, too.

Some of the sessions have been uploaded to the BrainBlast WeberTube group. I’ll finish uploading the rest I was able to record soon.

Where Can We Improve?

As mentioned earlier, Moodle’s learning curve is too steep for some. Perhaps we should offer two Moodle classes at next year’s BrainBlast so we can cover more topics. I personally think William Rice’s Moodle Teaching Techniques would be an excellent model for any further Moodle training we do. Another possibility would be to explore a lighter, simpler solution for teachers who don’t want the vast functionality Moodle offers. More on that in an upcoming post.

Nothing is set in stone, but the current plan for BrainBlast 2009 is to hold only two days of training rather than four and to combine the elementary and secondary teachers. In turn, we will increase the number of courses we offer and use more of the facilities to accommodate all the attendees at once. Frankly, I’m glad we’re planning on this (I don’t think I could come up with four days’ worth of Vinnie jokes again).

It seems we’ve fallen into a pattern of offering only introductory classes. After spending time in the classes and talking with some of our presenters, I realized that the workshop-style material didn’t suit all the attendees. Some were already fairly proficient in Office 2007, PowerPoint, blogging, clickers, and the other technologies we offer in the district, and had to wait for less-skilled but still eager-to-learn attendees to “catch up.” That left little room for our presenters to venture into advanced discussions of their topics.

It’s great that we’re offering training to people with such a wide range of skills, but we also need a way to group teachers by their skill level in particular technologies. It hardly makes sense to teach seasoned bloggers how to set up a blog, use the Dashboard, and write a simple post, when we could be teaching them about great widgets they can use, how to use RSS feeds, why a plugin like Slimstat is important for tracking visitor statistics, and how to use a blog as a platform for engaging the educational community: leaving comments, using pingbacks and trackbacks, and growing a personal learning network.

So I hope we can do something different for BrainBlast 2009. In lieu of “elementary” and “secondary” tracks, we could offer two types of classes: “beginner” and “advanced.” That way, teachers who want to explore the tools in more depth could do so. Those who are already proficient document camera users, SMART Board users, bloggers, Moodlers, PowerPointers, and so on could learn more advanced tricks without waiting for their less-proficient (but still eager to learn!) classmates to catch up.

A couple issues come to mind about this approach, though:

- How would we estimate class sizes? Could we guarantee full classrooms in all our advanced classes?

- We’d have to very clearly distinguish what an “advanced user” is, so everyone would be on roughly the same page in the class. We don’t want someone who thinks they’re qualified to join the “Advanced Podcasting” class struggling with basic things like recording in Audacity and posting MP3s on their blog. All attendees should be able to jump right into advanced topics without waiting too long for others. Perhaps a simple survey could help registrants determine which track they belong in.
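Here is a sketch of how such a self-assessment survey could work, and how its results might feed into the class-size question, assuming a few yes/no questions per class and a fixed lab size. The questions, the cut-off of three “yes” answers, and the 30-seat capacity are hypothetical numbers chosen for illustration, not anything we’ve decided.

```python
import math

# Hypothetical self-assessment questions for an "Advanced Podcasting" class.
QUESTIONS = [
    "Have you recorded audio in Audacity?",
    "Have you exported a recording as an MP3?",
    "Have you posted an MP3 on your blog?",
    "Have you published a podcast feed?",
]
ADVANCED_THRESHOLD = 3  # hypothetical: at least 3 "yes" answers = advanced
ROOM_CAPACITY = 30      # hypothetical number of seats per computer lab

def assign_track(answers, threshold=ADVANCED_THRESHOLD):
    """answers: one boolean per question in QUESTIONS."""
    return "advanced" if sum(answers) >= threshold else "beginner"

def sections_needed(registrants, capacity=ROOM_CAPACITY):
    # Ceiling division: a partly filled room still needs its own section.
    return math.ceil(registrants / capacity)

survey = {
    "teacher1": [True, True, True, False],
    "teacher2": [True, False, False, False],
    "teacher3": [True, True, True, True],
}
tracks = {name: assign_track(answers) for name, answers in survey.items()}
advanced = sum(1 for track in tracks.values() if track == "advanced")
print(tracks)
print(f"Advanced sections needed: {sections_needed(advanced)}")
```

Comparing `advanced` with `sections_needed(advanced) * ROOM_CAPACITY` would also show roughly how full each advanced room is likely to be, which speaks to the question of guaranteeing full classrooms.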

And finally, maybe it’s just me, but it seems silly for teachers to attend a technology conference, sit in a computer lab, and learn about cool websites, only to write the links down on paper. Why aren’t we showing them how to use social bookmarking sites like Delicious or Diigo (especially with the new Diigo Educator accounts that were just released)? These sites let you store all your bookmarks online and share them with others in your learning network, so teachers could simply pull up their bookmarks in any class they attend. Diigo is especially awesome because it also lets you highlight text on bookmarked pages, so you can share specific snippets, point to especially relevant passages in long pages, or even annotate them with your own comments.

I’m already looking forward to BrainBlast 2009. If the trend continues, it will be even better than this year’s conference.