Update on the Moodle Survey

Last week I invited all our Moodle-using teachers and students to take a survey about their usage of Moodle as an online course management system and supplement to in-class instruction. The response has been great. So far (as of November 23, 9:30pm), 652 students and 17 teachers have participated. The survey was supposed to close today, but at the request of the teachers and students, I am leaving it open until November 30.

The survey questions assess four categories: (1) Participant Attitudes, (2) Collaboration, (3) Instructional Preparation, and (4) Instructional Delivery. There are a few goals I’m keeping in mind. One is to help determine the collaborative effect of our usage of Moodle. During the last BrainBlast, we taught a session to (almost) every secondary teacher in attendance: “Creating Collaborators with Moodle.” This raises the question, “Just how well are we currently using Moodle for collaboration?” Where can we improve? Our teachers have had the opportunity to put what they learned in that session into practice, so has collaboration improved? The survey addresses this.

Another goal is to determine whether Moodle actually reduces instructional preparation time. Our teachers have commonly criticized Moodle for being too time-consuming. This is a valid concern, so the survey includes questions about the preparation time for the two major activities our teachers use in Moodle: assignments and quizzes. The delivery aspect of Moodle is important, too. Are students more engaged when they use Moodle? Is it easier for them to submit their homework? Does using Moodle save them time as well?

Originally, I was planning on correlating Moodle usage with academic performance, but after consulting with my professor, Dr. Ross Perkins, I realized that there are too many factors involved to even suggest some sort of link between the two. Moreover, the instruction itself is key, not necessarily the tool of delivery. What’s more, I think such a question belongs to a research project rather than an evaluation. It would be incredibly useful to research and establish best practices for online course delivery; the scholarship in this area is large and constantly evolving, but a lot of work remains to be done. That work, however, is outside the scope of this evaluation.

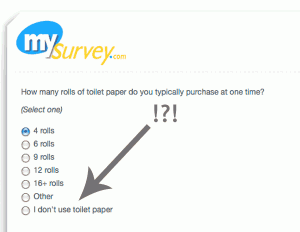

Most of the Moodle survey uses a Likert scale (Strongly Agree to Strongly Disagree) for the questions. There’s considerable debate over whether scales like this should employ a middle ground (e.g. Neither Agree nor Disagree) by providing an odd number of answers, or force participants to choose a position by providing an even number of answers. After tossing the two options back and forth in my mind, I decided on providing a middle option for the questions. I’m wondering now if that was a mistake. I believe it introduces a degree of apathy into the survey that, in hindsight, I didn’t really want. A lot of the participants are choosing “Neither Agree nor Disagree” for some of their answers, when I suspect they probably mean “Disagree.”
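One rough way to see how much the middle option is affecting a given question is to tally the share of neutral answers alongside the mean score. The Python sketch below is only an illustration: the 1-to-5 coding, the `summarize` function, and the sample responses are all assumptions made up for this example, not the survey's actual data or export format.

```python
from collections import Counter

# Assumed 1-5 coding for a five-point Likert scale (illustrative only).
LIKERT_SCORES = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neither Agree nor Disagree": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}
NEUTRAL = "Neither Agree nor Disagree"


def summarize(responses):
    """Return counts, mean score, and neutral share for one question."""
    if not responses:
        raise ValueError("no responses to summarize")
    counts = Counter(responses)
    total = len(responses)
    mean_score = sum(LIKERT_SCORES[r] for r in responses) / total
    neutral_share = counts[NEUTRAL] / total
    return {"counts": dict(counts), "mean": mean_score, "neutral_share": neutral_share}


# Hypothetical answers to a single question, not real survey data.
sample = ["Agree", NEUTRAL, NEUTRAL, "Disagree", "Strongly Agree"]
summary = summarize(sample)
print(summary)
```

A question with a high neutral share and a mean near 3 would be a candidate for closer reading, since the mean alone cannot distinguish genuine neutrality from reluctant disagreement.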

Ultimately, though, the data are still valuable. Apparently, most students do not feel that they are given many opportunities to collaborate in Moodle. Teachers generally agree that they don’t bother to provide these opportunities for collaboration. Perhaps as a follow-up, I should ask the teachers why they don’t provide collaborative opportunities, especially those teachers who attended the BrainBlast session.

The data also indicate that both students and teachers generally agree that Moodle saves time in both preparation and instruction. The disagreements here come mainly from math teachers who complain about Moodle’s general lack of support for math questions (DragMath is integrated into our Moodle system, but this isn’t enough), and the inability to provide equations and mathematical formulas in quiz answers. This is undoubtedly worth further investigation. I came across a book on Amazon (Wild, 2009) that may provide some valuable insights.

Participants are also prompted to provide open-ended comments. So far, the answers fall all across the spectrum, from highly positive (e.g. “I love taking online courses; it’s easy, organized, and efficient…”) to extremely negative (e.g. “I hate everything about it; online schooling is the worst idea for school…”). I will address these diverse answers in the final report.

The survey isn’t the only measurement tool I’m using. I’m also going to employ a rubric to assess each online course represented in the survey. There are few enough courses (about 20) that assessing all of them is feasible in the time available. After that, I just have to analyze the results, create some graphs of the data, and write up the final report with recommendations.

References

Wild, I. (2009). Moodle 1.9 Math. Packt Publishing.
